Understanding Principal Component Analysis

Prev: regularization Next: how-pca-works

A Toy Example

PCA is used to visualize the data in a lower-dimensional space, to understand sources of variability in the data, and to understand correlations between different coordinates of data points.

Take this table, where your friends rate on a scale of 1 to 10, four foods, kale, taco bell, sashimi, and pop tarts.

| kale | taco bell | sashimi | pop tarts | |

|---|---|---|---|---|

| Alice | 10 | 1 | 2 | 7 |

| Bob | 7 | 2 | 1 | 10 |

| Carolyn | 2 | 9 | 7 | 3 |

| Dave | 3 | 6 | 10 | 2 |

There are 4 data points (), and 4 dimensions ().

We can represent the data points thusly:

The average of all data points is the vector:

Where the vectors and are the following:

And each data point can be expressed this way:

And is for Alice, for Bob, etc.

So to calculate Alice’s scores, you would calculate , and for Bob’s scores, .

This is useful to plot the data, and also to interpret the data.

If we look at , we notice that items 1 and 4 are highly correlated, and items 2 and 3 are as well. Thus, we can guess that pairs (1, 4) and (2, 3) are alike, and also (1, 4) and (2, 3) are very unalike.

Since we know that kale salad and pop tarts are vegetarian, and taco bell and sashimi are not, one guess could be that encodes the vegetarian preferences of the person.

As well, could encode the health consciousness of the person, since kale salad and sashimi are more health conscious than pop tarts and taco bell.

Once normalized, the vectors and correspond to the top two principal components of the data. PCA is used to compute approximations of this type automatically, for large data sets.

Goal of PCA

The goal of PCA is to approximately express each of -dimensional vectors as linear combinations of -dimensional vectors , so:

For each . PCA offers a definition of which vectors are the best ones for this purpose.

Relation to Dimensionality Reduction

This is similar to the JL dimensionality reduction technique.

There are a few differences:

- JL dimensionality reduction assumes that you care about the Euclidean distance between each pair of points. PCA offers no guarantees about preserving pairwise distances.

- JL dimensionality reduction is data-oblivious, where the randomly chosen vectors are chosen without looking at the data points. PCA is deterministic, where the point is to compute vectors that explain the dataset.

- The coordinates used in JL have no intinsic meaning, whereas in PCA they do.

- JL only gives good results when is at least in the low hundreds. PCA can give meaningful results even when is 1 or 2, but there are also datasets where it provides little interesting information, for any .

Relation to Linear Regression

Linear Regression and PCA create the best fitting dimensional subspace to a point set. This works when each data point has a label, and there is a relationship between one coordinate to the rest of the coordinates. They are also assumed to be independent of each other.

In PCA, all coordinates are treated equally, and they are not independent from each other.

As well, Linear Regression and PCA have different definitions of best fit.

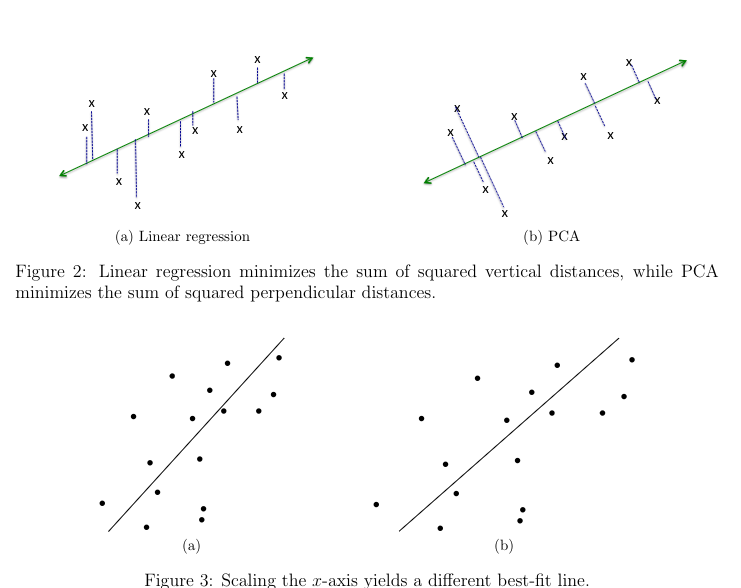

Linear Regression minimizes the total squared error, as that creates a line of best fit that minimizes the sum of squared vertical distances between the line and data points. This is because in linear regression, the coordinate corresponding to labels is the important one.

In PCA, the squared perpendicular distances are minimized, which shows that all coordinates play a symmetric role, and Euclidean distance is most appropriate.

Defining the Problem

Preprocessing

First, preprocess the data by centering points around the origin, so that has an all-zero vector, . This can be done by subtracting each point by the sample mean, .

Afterwards, the data is unshifted. This makes the linear algebra easier to apply.

It is also important to scale each coordinate, so the units each coordinate is measured in does not affect the best-fit line.

One approach involves taking the points centered at the origin, then for every coordinate , divide the th coordinate by the sample deviation, .

If this isn’t done, then the line of best fit changes for a unit, say between kilometers and miles, even though the underlying data is the same, just measured with different units.

The Objective Function

The best fit line for a dataset with a of 1 looks like this:

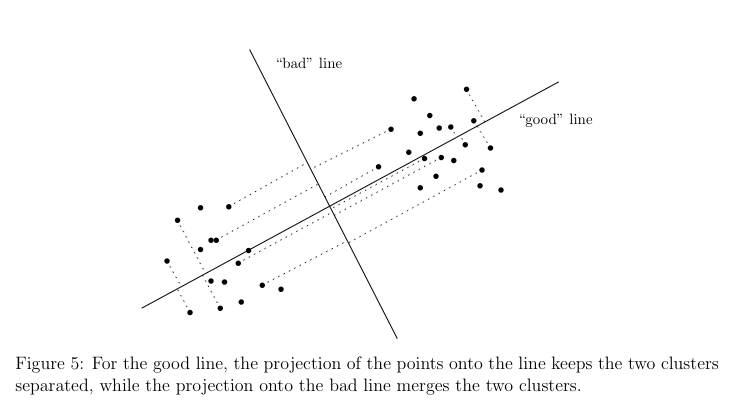

Minimizing the Euclidean distance between points and the chosen line is done above, but also, the distances are squared before adding up. This ensures the best-fit line passes through the origin, and maximizes variance.

Maximizing variance is useful for PCA, since it preserves clusters that are disimilar from each other in higher dimensional space. It should be used for when variance is thought to glean useful information. If variance is just noise, then it won’t be useful.

Larger Values of

For more dimensions than one, the work is similar. The PCA objective funciton becomes:

Recalling that vectors are orthonormal if they have unit length for all i, and are orthogonal for all . Rotating these vectors gives more sets of orthonormal vectors.

The span of a collection of vectors is their linear combinations: . If then this span is a line through the origin. For where and are linearly independent, then the span is a plane through the original.

Orthonormal vectors is that the squared projection length of a point onto the subspace spanned by the vectors is just the sum of squares of its projections onto each of the vectors:

.

Combining the last two equations, we can state the objective of PCA for general in its standard form. Compute orthonormal vectors to maximize:

The right hand side is the squared projection length.

The resulting orthonormal vectors are the top principal components of the data.

More formally:

Use Cases

Data Visualization

PCA is commonly used for data visualization. One typically takes to be 1,2,3. Given data points :

-

Perform PCA to get the top principal components .

-

For each data point , define its -coordinate as , its -coordinate as , etc. This sets coordinates with each data point .

-

Plot the point in as the point .

One way to think about PCA is a method for approximating data points as linear combinations of the same vectors. PCA uses the vectors , and the coordinates to specify the lienar combination of these vectors that most closely approximate . Thus, PCA approximates each as:

Interpreting the Results

Both the and the projections of onto them are interesting.

-

The datapoints with the largest and smallest projections $(x_i,v_1) on the first principal component may suggest a potential meaning for the component. If any data points are clustered together at each of the two ends, they may have something in common that is illuminating.

-

Plot all points according to their coordinate values. See if any clusters pop up at the corners. For example, looking at pairs that have similar second coordinates, it’s possible to get a rough interpretation of the second principal component.

-

The coordinates of a principal component can also be helpful.

Do Genomes Encode Geography?

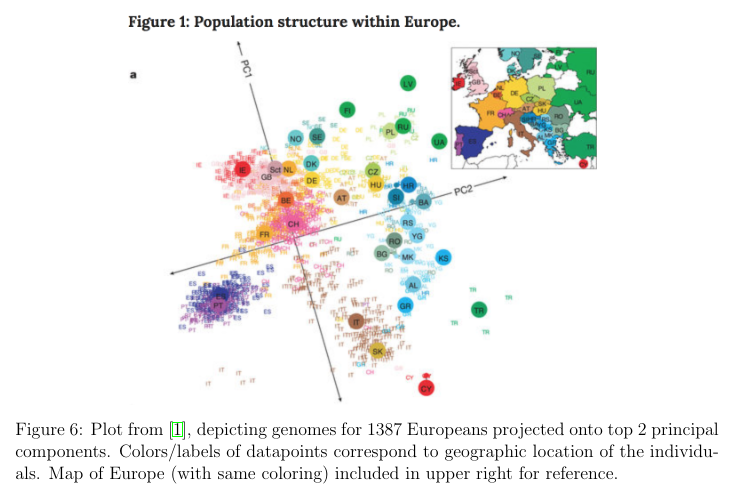

PCA can be used to depict genomes in Europe on two dimensions. 1387 Europeans were analyzed on 200,000 SNPs, which are genomes. So with an , and , with two principal components, and , this result appeared. was the latitude, and was the longitude, rotated 16 degrees.

Interestingly, this only held for Europe, because America was more sensitive to immigration trends.

Data Compression

Another famous application of PCA is the Eigenfaces project. The data points are a bunch of faces, all framed the same way, under the same lighting conditions. Thus, is the number of pixels, 65k. However, the top 100-150 principcal components represents the components with high accuracy.

Failure Cases

-

Scaling/normalization was messed up. PCA is sensitive to different scalings/normalizations, and getting good results from PCA involves choosing an appropriate scaling for the different data coordinates.

-

Non-linear structure. PCA finds a linear structure in the data, so if the data has some low-dimensional, non-linear structure, PCA will not find anything useful.

-

Non-orthogonal structure.

Prev: regularization Next: how-pca-works